allama

Allama - LLM Testing and Benchmarking Suite

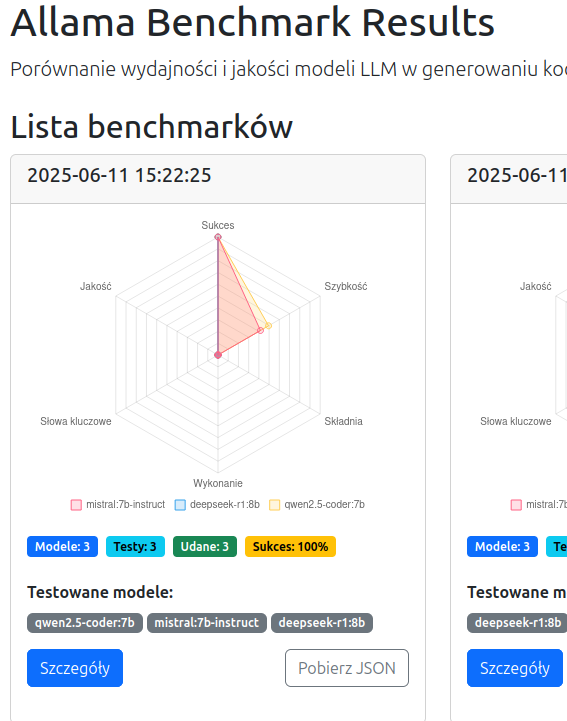

A comprehensive testing and benchmarking suite for Large Language Models (LLMs) focused on Python code generation. The project enables automatic quality assessment of generated code through various metrics and generates detailed HTML reports.

Features

- Automated Testing of multiple LLM models with configurable prompts

- Code Quality Assessment - syntax checking, execution, style, and functionality

- Detailed HTML Reports with metrics, charts, and comparisons

- Interactive Code Diff - visual comparison of code generated by different models

- Results Export to CSV and JSON for further analysis

- Highly Configurable - easily add new models and tests

- Multiple API Support - Ollama, local servers, cloud services

- Model Ranking based on performance and quality metrics

- Zero Configuration - automatically generates default config files when needed

- Benchmark Publishing - share your results with the community via the Allama server

- Local Results Storage - automatically saves results in timestamped folders

- Prompt Analysis - detailed information about prompts used in benchmarks

- Radar Charts - visual comparison of model performance across multiple metrics

Quick Start

1. Installation

Using Poetry (recommended)

# Clone the repository

git clone https://github.com/wronai/allama.git

cd allama

# Install dependencies

pip install poetry

poetry install

# Activate the virtual environment

poetry shell

Using pip

# Clone the repository

git clone https://github.com/wronai/allama.git

cd allama

pip install .

2. Model Configuration

Create or edit the models.csv file to configure your models:

model_name,url,auth_header,auth_value,think,description

mistral:latest,http://localhost:11434/api/chat,,,false,Mistral Latest on Ollama

llama3:8b,http://localhost:11434/api/chat,,,false,Llama 3 8B

gpt-4,https://api.openai.com/v1/chat/completions,Authorization,Bearer sk-...,false,OpenAI GPT-4

CSV Columns:

model_name- Name of the model (e.g., mistral:latest, gpt-4)url- API endpoint URLauth_header- Authorization header (if required, e.g., “Authorization”)auth_value- Authorization value (e.g., “Bearer your-api-key”)think- Whether the model supports “think” parameter (true/false)description- Description of the model

Configuration

The application is configured using external files, with config.json being the primary configuration file.

Main Configuration (config.json)

This file, located in the root directory, contains all the main settings for the application:

prompts_file: Path to the file containing test prompts (e.g.,prompts.json).evaluation_weights: Points awarded for different code quality metrics.timeouts: Time limits for API requests and code execution.report_config: Settings for the generated HTML report, such as the title.colors: Color scheme used in the HTML report.

You can create your own configuration file (e.g., my_config.yaml) and use it with the --config flag during runtime.

Prompts Configuration (prompts.json)

This file contains a list of test cases (prompts) that will be sent to the language models. Each prompt is a JSON object with the following keys:

name: A descriptive name for the test (e.g., “Simple Addition Function”).prompt: The full text of the prompt to be sent to the model.expected_keywords: A list of keywords that are expected to be present in the generated code.

Automatic Configuration Generation

The system will automatically generate default configuration files (config.json and prompts.json) if they don’t exist when you run the tool. This means you can simply run the allama command without any setup, and the necessary configuration files will be created for you with sensible defaults.

Using Custom Configuration

You can run tests with a custom configuration file (in either JSON or YAML format) using the --config or -c flag. The settings from your custom file will be merged with the defaults.

Example with JSON:

allama --config my_config.json

Example with YAML:

allama --config custom_settings.yaml

Reports and Output

Allama generates comprehensive reports to help you analyze and compare model performance:

HTML Report (allama.html)

An interactive HTML report is generated after each test run, containing:

- Summary Dashboard - Overview of test results with key metrics

- Model Ranking - Performance comparison of all tested models

- Detailed Results - In-depth analysis of each model’s performance

- Code Comparison - Interactive diff viewer to compare code generated by different models

JSON Data (allama.json)

All test results are also saved in a structured JSON format for:

- Further analysis with external tools

- Integration with other systems

- Custom visualization and reporting

The JSON file contains complete information about:

- Test configuration

- Model responses

- Evaluation metrics

- Generated code

CSV Summary (*_summary.csv)

A CSV summary file is also generated with key metrics for quick analysis in spreadsheet applications.

Example Report Usage

The HTML report allows you to:

- View overall model rankings

- Examine detailed results for each model and prompt

- Compare code generated by different models using the interactive diff tool

- Filter and sort results based on various metrics

To view the report, simply open allama.html in any modern web browser after running tests.

Publishing Results Online

Allama allows you to publish your benchmark results to a central repository at allama.sapletta.com, making it easy to share and compare results with others:

# Run benchmark and publish results

allama --benchmark --publish

# Specify a custom server URL

allama --benchmark --publish --server-url https://your-server.com/upload.php

The publishing system includes:

- Rate limiting: Maximum 3 uploads per day per IP address

- Request throttling: Minimum 1 second between requests

- Automatic organization: Results are stored in timestamped directories

- Web interface: Browse and compare published benchmarks

- Responsive design: Optimized for both desktop and mobile devices

- Radar charts: Visual comparison of model performance across multiple metrics

- Badge-style metrics: Quick overview of key benchmark statistics

After publishing, you’ll receive a URL where you can view your results online.

Local Results Storage

All benchmark results are automatically saved locally in a timestamped folder structure:

data/

└── test_YYYYMMDD_HHMMSS/

├── allama.json # Complete benchmark results

├── allama.html # HTML report

└── prompts.json # Detailed prompt information

This allows you to:

- Keep a history of all benchmark runs

- Compare results over time

- Share specific benchmark results with others

Benchmark Visualization

The benchmark server provides several visualization features:

- Responsive 3-column layout: Displays benchmarks in an easy-to-scan grid (collapses to single column on mobile)

- Radar charts: Each benchmark includes a radar chart showing model performance across 6 key metrics:

- Success rate

- Response speed

- Syntax correctness

- Execution success

- Keywords presence

- Code quality

- Badge-style metrics: Key statistics displayed as GitHub-style badges for quick reference

- Model comparison: Easy visual comparison of multiple models within each benchmark

Usage

Using Makefile (recommended)

# Install dependencies and setup

make install

# Run tests

make test

# Run all tests including end-to-end

make test-all

# Run benchmark suite

make benchmark

# Test a single model (set MODEL=name)

make single-model

# Generate HTML report

make report

# Run code formatters

make format

# Run linters

make lint

Basic Command-Line Usage

# Run all tests with default configuration

allama

# Run benchmark suite

allama --benchmark

# Test specific models

allama --models "mistral:latest,llama3:8b,gemma2:2b"

# Test a single model

allama --single-model "mistral:latest"

# Compare specific models

allama --compare "mistral:latest" "llama3:8b"

# Generate HTML report

allama --output benchmark_report.html

# Run with verbose output

allama --verbose

Advanced Usage

# Run with custom configuration

allama --config custom_config.json

# Test with a specific prompt

allama --single-model "mistral:latest" --prompt-index 0

# Set request timeout (in seconds)

allama --timeout 60

Evaluation Metrics

The system evaluates generated code based on the following criteria:

Basic Metrics (automatic)

- Correct Syntax - whether the code compiles without errors

- Executability - whether the code runs without runtime errors

- Keyword Matching - whether the code contains expected elements from the prompt

Code Quality Metrics

- Function/Class Definitions - proper code structure

- Error Handling - try/except blocks, input validation

- Documentation - docstrings, comments

- Imports - proper library usage

- Code Length - reasonable number of lines

Scoring System

- Correct Syntax: 3 points

- Runs without errors: 2 points

- Contains expected elements: 2 points

- Has function/class definitions: 1 point

- Has error handling: 1 point

- Has documentation: 1 point

- Maximum: 10 points

Ansible Configuration

Create tests/ansible/inventory.ini with:

[all]

localhost ansible_connection=local

API Integration Examples

Ollama (local)

llama3:8b,http://localhost:11434/api/chat,,,false,Llama 3 8B

OpenAI API

gpt-4,https://api.openai.com/v1/chat/completions,Authorization,Bearer sk-your-key,false,OpenAI GPT-4

Anthropic Claude

claude-3,https://api.anthropic.com/v1/messages,x-api-key,your-key,false,Claude 3

Local Server

local-model,http://localhost:8080/generate,,,false,Local Model

Project Structure

allama/

├── allama/ # Main package

│ ├── __init__.py # Package initialization

│ ├── main.py # Main module

│ ├── config_loader.py # Configuration loading and generation

│ └── runner.py # Test runner implementation

├── tests/ # Test files

│ └── test_allama.py # Unit tests

├── models.csv # Model configurations

├── config.json # Main configuration (auto-generated if missing)

├── prompts.json # Test prompts (auto-generated if missing)

├── pyproject.toml # Project metadata and dependencies

├── Makefile # Common tasks

└── README.md # This file

Example Output

After running the benchmark, you’ll get:

- Console Output: Summary of test results

- HTML Report: Detailed report with code examples and metrics

- CSV/JSON: Raw data for further analysis

Getting Help

If you encounter any issues or have questions:

- Check the issues page

- Create a new issue with detailed information about your problem

Contributing

Contributions are welcome! Please read our Contributing Guidelines for details on how to contribute to this project.

License

This project is licensed under the MIT License - see the LICENSE file for details.

Acknowledgments

- Thanks to all the open-source projects that made this possible

- Special thanks to the Ollama team for their amazing work